Yao, A.C.: Protocols for secure computations. Stutzbach, D., Rejaie, R., Duffield, N., Sen, S., Willinger, W.: On unbiased sampling for unstructured peer-to-peer networks. In: Italiano, G.F., Margaria-Steffen, T., Pokorný, J., Quisquater, J.-J., Wattenhofer, R. Saia, J., Zamani, M.: Recent results in scalable multi-party computation. In: Proceedings of the 13th IEEE International Conference on Peer-to-Peer Computing (P2P 2013). Roverso, R., Dowling, J., Jelasity, M.: Through the wormhole: Low cost, fresh peer sampling for the internet. In: JMLR Workshop and Conference Proceedings of AISTATS 2012, vol. 22, pp. Rajkumar, A., Agarwal, S.: A differentially private stochastic gradient descent algorithm for multiparty classification. Paillier, P.: Public-key cryptosystems based on composite degree residuosity classes.

Concurrency and Computation: Practice and Experience 25(4), 556–571 (2013) Ormándi, R., Hegedűs, I., Jelasity, M.: Gossip learning with linear models on fully distributed data. Naranjo, J.A.M., Casado, L.G., Jelasity, M.: Asynchronous privacy-preserving iterative computation on peer-to-peer networks.

Maurer, U.: Secure multi-party computation made simple. In: Proceedings of the 26th Annual International Conference on Machine Learning (ICML 2009), pp. Ma, J., Saul, L.K., Savage, S., Voelker, G.M.: Identifying suspicious URLs: an application of large-scale online learning. Lichman, M.: UCI machine learning repository (2013), Jesi, G.P., Montresor, A., van Steen, M.: Secure peer sampling. IEEE Transactions on Knowledge and Data Engineering 22(6), 884–899 (2010)

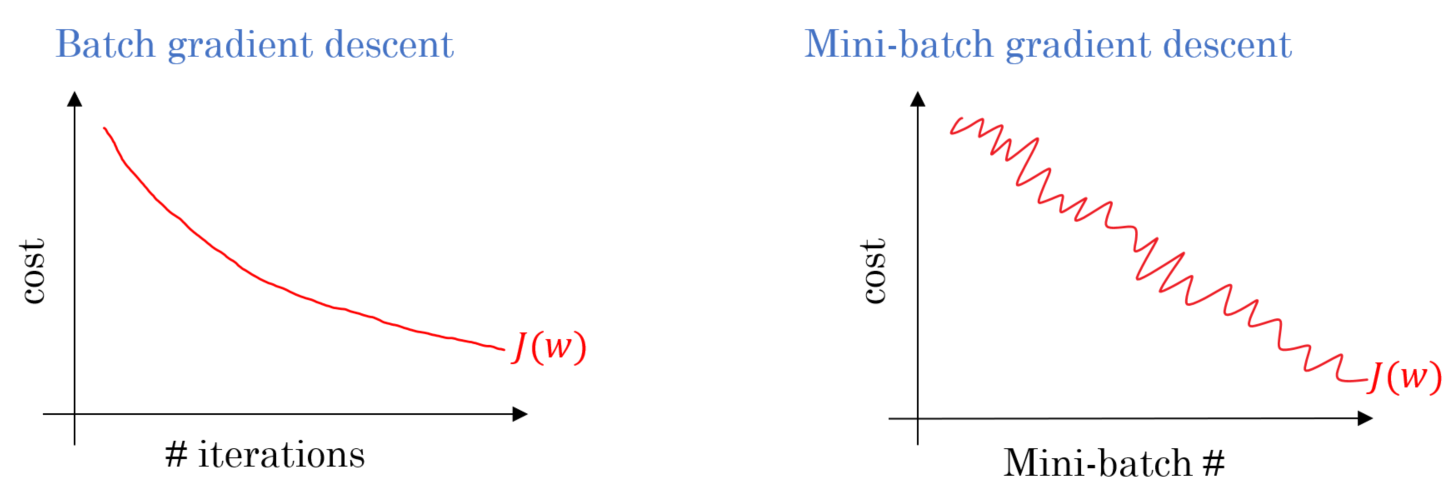

In fully distributed machine learning, privacy and security are important issues. These issues are often dealt with using secure multiparty computation (MPC). However, in our application domain, known MPC algorithms are not scalable or not robust enough. We propose a lightweight protocol to quickly and securely compute the sum of the inputs of a subset of participants, assuming a semi-honest adversary. During the computation the participants learn no individual values. We apply this protocol to efficiently calculate the sum of gradients as part of a fully distributed mini-batch stochastic gradient descent algorithm. The protocol achieves scalability and robustness by exploiting the fact that in this application domain a "quick and dirty" sum computation is acceptable. In other words, speed and robustness take precedence over precision. We analyze the protocol theoretically as well as experimentally, based on churn statistics from a real smartphone trace. We derive a sufficient condition for preventing the leakage of an individual value, and we demonstrate the feasibility of the overhead of the protocol.
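The idea of summing inputs so that no participant learns an individual value can be illustrated with textbook additive secret sharing. The sketch below is not the protocol proposed in this work, whose details are not given here. It is a generic illustration that assumes semi-honest participants who all stay online, so it does not address churn or robustness. The names `MODULUS`, `SCALE`, `make_shares`, and `secure_sum` are hypothetical and chosen only for this example.

```python
import secrets

# Assumptions for illustration only: a public modulus and a fixed-point scale
# used to map real-valued inputs (e.g. gradient coordinates) to integers.
MODULUS = 2**61 - 1
SCALE = 10**6


def encode(x: float) -> int:
    """Map a real number to a fixed-point residue modulo MODULUS."""
    return round(x * SCALE) % MODULUS


def decode(v: int) -> float:
    """Map a residue back to a signed real number."""
    if v > MODULUS // 2:
        v -= MODULUS
    return v / SCALE


def make_shares(value: int, n: int) -> list[int]:
    """Split value into n random shares that add up to value modulo MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n - 1)]
    last = (value - sum(shares)) % MODULUS
    return shares + [last]


def secure_sum(inputs: list[float]) -> float:
    """Sum the inputs using additive shares; simulated in a single process."""
    n = len(inputs)
    # Participant i splits its encoded input; share j would be sent to participant j.
    all_shares = [make_shares(encode(x), n) for x in inputs]
    # Participant j adds the shares it received. A single partial sum is a
    # uniformly random residue and reveals nothing about any one input.
    partials = [sum(all_shares[i][j] for i in range(n)) % MODULUS for j in range(n)]
    # Only the combination of all partial sums yields the total.
    return decode(sum(partials) % MODULUS)


if __name__ == "__main__":
    # One gradient coordinate from each of three participants.
    print(secure_sum([0.5, -1.25, 2.0]))
```

In a mini-batch setting, the same routine would be applied to each coordinate of the gradient vectors, and the resulting sum would be used for one update step. The fixed-point encoding loses precision beyond `1 / SCALE`, which is in keeping with the point that an approximate sum is acceptable in this domain.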
